A flaw of many web designs is that they focus only on the primary users (humans) and treat the secondary users (robots) as “out of sight, out of mind”. And those robots are hard to see, even with analytics tools.

Since building your site for humans is pretty obvious, this Golden Rule focuses on the not-so-obvious users and on building your site for robots.

With no instructions, search engine robots (also called search engine spiders) start crawling every page they can sniff out on your domain. These spiders will most likely treat every page the same, giving your most important pages no priority over your least important ones. Unless, of course, you give these robots instructions.

Start with an XML Sitemap.

A human sitemap helps visitors see all the pages of a website. An XML sitemap does the same thing for robots, listing the URLs you want them to find. XML sitemaps are easy to create with a sitemap generator; a quick Google search will point you in the right direction.
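To make that concrete, here is a minimal Python sketch of what a sitemap generator produces, assuming you already have a list of page URLs (the `/services/` path is hypothetical). It follows the sitemaps.org format: a `<urlset>` root in the sitemap namespace with one `<url><loc>` entry per page. Real generators usually also crawl your site for you and can add optional fields such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string with one <url><loc> entry per URL."""
    # Setting xmlns as a plain attribute makes every child inherit the sitemap namespace
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&", as the protocol requires
        ET.SubElement(entry, "loc").text = url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


# Hypothetical page list; swap in your own URLs
print(build_sitemap([
    "http://www.goldencomm.com/",
    "http://www.goldencomm.com/services/",
]))
```

Save the output as `sitemap.xml` at the root of your site so robots can find it.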

Use a robots.txt file to restrict robot access to certain pages and improve SEO results.

Think of a robot as a new employee. If you expect a new employee to find everything they need without any guidance on what matters most and what matters least, that employee is going to be very ineffective. Left to crawl on its own, an unguided robot will likely pay as much attention to the troublesome areas of your website as to its most important pages. Restricting access to junk and hazards while making quality content easy to reach is an important and often overlooked part of SEO.

A robots.txt file, part of the Robots Exclusion Protocol (REP), is a plain text file webmasters create to tell robots, typically search engine robots, which pages on their website to crawl and which NOT to crawl. That makes it an easy but effective addition to any website. Keep in mind that robots.txt controls crawling, not indexing: a blocked page can still show up in search results if other pages link to it.
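Python’s standard library includes a robots.txt parser, `urllib.robotparser`, which makes it easy to sanity-check your rules before you publish them. The file below is a hypothetical example: `/admin/` and `/cart/` stand in for whatever junk and hazards your own site has, and the `Sitemap:` line (a widely supported extension) points robots at your XML sitemap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the disallowed paths are placeholders for your own site
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: http://www.goldencomm.com/sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt text (instead of fetching it over HTTP) into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
# "User-agent: *" applies to every robot, including Googlebot
print(rules.can_fetch("Googlebot", "http://www.goldencomm.com/admin/login"))  # False
print(rules.can_fetch("Googlebot", "http://www.goldencomm.com/services/"))    # True
```

Once the rules behave as expected, publish the file at the root of your domain (for example, `/robots.txt`), which is where robots look for it.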

Using a Canonical URL

Yes, it’s a bit of a strange word (canonical, or canonicalization, as Google calls the process), but it’s worth understanding and making sure your site is properly coded for these robots. Canonicalization is simply the process of picking the best URL when several URLs lead to the same content, and it comes up most often with home pages. For example, most people would consider these URLs the same page:

- www.goldencomm.com

- goldencomm.com/

- www.goldencomm.com/default.aspx

So how do you make sure that Google picks the URL you want?

First, use that URL consistently across your entire site. For example, don’t point half of your links at http://goldencomm.com/ and the other half at http://www.goldencomm.com. Instead, pick the URL format you prefer and always use it for your internal links.
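One way to keep internal links consistent is to run every link through a single helper before it is written into your pages. The Python sketch below is hypothetical: it assumes `www.goldencomm.com` is the preferred host and that `default.aspx` is the only default-document name to collapse, so it maps all three home-page variants listed above to one URL. Adjust both assumptions for your own site.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumptions for this sketch: the preferred host and the default-document names to drop
PREFERRED_HOST = "www.goldencomm.com"
HOST_ALIASES = {"goldencomm.com", "www.goldencomm.com"}
DEFAULT_DOCUMENTS = {"default.aspx"}


def canonicalize(url):
    """Rewrite a URL into the site's single preferred format."""
    # Bare host names like "goldencomm.com/" have no scheme, so assume http://
    parts = urlsplit(url if "://" in url else "http://" + url)
    host = parts.hostname or ""  # lowercased; any :port is dropped in this sketch
    if host in HOST_ALIASES:
        host = PREFERRED_HOST
    path = parts.path or "/"
    # Collapse ".../default.aspx" to ".../" so both forms share one URL
    last_segment = path.rsplit("/", 1)[-1]
    if last_segment.lower() in DEFAULT_DOCUMENTS:
        path = path[: -len(last_segment)]
    # Keep the query string, drop any #fragment (browsers never send it to the server)
    return urlunsplit((parts.scheme, host, path, parts.query, ""))


for variant in ["www.goldencomm.com", "goldencomm.com/", "www.goldencomm.com/default.aspx"]:
    print(canonicalize(variant))  # http://www.goldencomm.com/ each time
```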

Next, configure your web server so that a request for http://goldencomm.com/ gets a 301 (permanent) redirect to http://www.goldencomm.com. That tells robots which URL you prefer as canonical. A 301 redirect can be an especially good idea if your site changes often (e.g. dynamic content, a blog, etc.).
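Exactly how you set up the redirect depends on your server (the `default.aspx` URL above suggests IIS, which has its own redirect rules). As a server-agnostic illustration, here is a minimal sketch assuming a Python WSGI application: a hypothetical middleware that answers any request for a non-preferred host with a 301 pointing at the same path on `www.goldencomm.com`.

```python
from urllib.parse import quote

CANONICAL_HOST = "www.goldencomm.com"  # assumption: the preferred host


def redirect_to_canonical_host(app):
    """Wrap a WSGI app so requests for any other host get a 301 to CANONICAL_HOST."""
    def middleware(environ, start_response):
        # Host header without any ":port" suffix
        host = environ.get("HTTP_HOST", "").split(":")[0].lower()
        if host and host != CANONICAL_HOST:
            scheme = environ.get("wsgi.url_scheme", "http")
            # WSGI passes the path as latin-1-decoded text; re-encode before URL-quoting
            raw_path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
            path = quote(raw_path.encode("latin-1")) or "/"
            query = environ.get("QUERY_STRING", "")
            location = f"{scheme}://{CANONICAL_HOST}{path}" + (f"?{query}" if query else "")
            # 301 = permanent, telling robots which URL you prefer
            start_response("301 Moved Permanently",
                           [("Location", location), ("Content-Type", "text/plain")])
            return [b"Moved Permanently"]
        return app(environ, start_response)
    return middleware
```

To try it locally, wrap any WSGI app and serve it with the standard library, e.g. `wsgiref.simple_server.make_server("", 8000, redirect_to_canonical_host(app)).serve_forever()`.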

Of course, make sure you’re using the proper meta tags throughout the site.

For the purpose of this Golden Rule, we’ll focus on the three tags we’re asked about most often.

Title Tags - the mother of all meta tags, and the easiest and most important single SEO tweak you can make. Title tags have a real impact on search rankings and, perhaps just as importantly, are the only one of the tags discussed here that is visible in the browser itself. You’ll find the title at the top of your browser window or tab, whether the page is an organic search result or a PPC landing page:

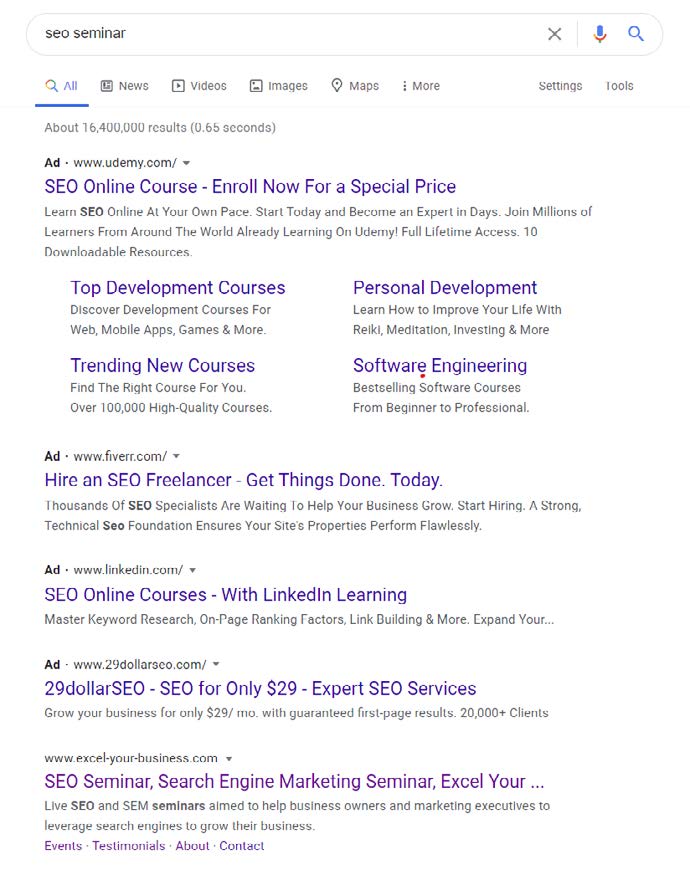
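Because robots read the title straight from the page’s HTML `<head>`, it helps to check what they will actually see. This is a minimal sketch using Python’s built-in `html.parser`; the sample page and its title text are made up for illustration.

```python
from html.parser import HTMLParser
from typing import Optional


class TitleExtractor(HTMLParser):
    """Collect the text of the first <title> element in a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # Only the first <title> counts
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Text may arrive in several chunks, so append rather than overwrite
        if self._in_title:
            self.title += data


def extract_title(html: str) -> Optional[str]:
    """Return the page's title text with surrounding whitespace trimmed, or None if missing."""
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return parser.title.strip() if parser.title is not None else None


# Hypothetical page
page = "<html><head><title>SEO Seminar | GoldenComm</title></head><body></body></html>"
print(extract_title(page))
```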

Meta Description Attribute - can be a useful meta tag, as it very simply explains to searchers what your page is about. Let’s say you were googling the phrase “SEO seminar”: the short summary under each result’s title is often taken from that page’s meta description.

The meta description tag holds a short snippet that summarizes a web page’s content. While it does NOT directly improve your rankings, it can help click-through rates a ton, because it tells visitors what a page is about before they click on it.
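If your pages are generated by code, a small helper can keep every description tag well-formed. This Python sketch (the helper name and sample text are hypothetical) collapses stray whitespace and HTML-escapes the text so quotes or ampersands in your summary can’t break the tag.

```python
from html import escape


def meta_description_tag(description: str) -> str:
    """Render a <meta name="description"> tag for a page's <head>."""
    # Collapse newlines and repeated spaces into single spaces
    text = " ".join(description.split())
    # quote=True also escapes double and single quotes, so the attribute stays intact
    return f'<meta name="description" content="{escape(text, quote=True)}">'


print(meta_description_tag('Join our "SEO seminar" & learn how robots read your site.'))
# <meta name="description" content="Join our &quot;SEO seminar&quot; &amp; learn how robots read your site.">
```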

Alt Tags - (or, more precisely, alt attributes) are “alternative text” for an image. People reading your website rarely see alt text (only SEO-type nerds like us go looking for it), but the robots sure like it, and image search is one way people “search” on Google. So describing those images properly in their alt text can be VERY important.
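A quick way to find images the robots can’t “read” is to scan your HTML for `<img>` tags without alt text. Here is a minimal Python sketch using the standard `html.parser`; the sample markup and file names are hypothetical.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also arrive here via handle_startendtag
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Blank alt="" is flagged too; it may be intentional for purely decorative images
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


sample = (
    '<img src="logo.png" alt="GoldenComm logo">'
    '<img src="seminar-photo.jpg">'
    '<img src="spacer.gif" alt="" />'
)
print(images_missing_alt(sample))  # ['seminar-photo.jpg', 'spacer.gif']
```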

Conclusion

As more websites come online every day and the world gets noisier and noisier, the robots need you to clearly articulate who you and your company are and what you do. By providing structure to the chaos of the web, metadata helps robots (and people) find what they want, and gives your content the edge in the world of search.